Introduction

The EU AI Act is the world’s first comprehensive regulation of artificial intelligence. Its first provisions take effect in 2025, and the remaining obligations phase in gradually through 2026-2027. Companies operating in the European market, regardless of industry, must prepare for the new obligations, especially if they use AI to process data, analyze documents or automate processes. The regulation aims to increase user security, improve the transparency of system operations and reduce the risk of fraud.

What is the AI Act?

The AI Act introduces rules for the responsible use of artificial intelligence. Its foundation is a risk-based approach: obligations increase with the potential threat that an AI system poses to citizens or organizations.

Risk levels in the AI Act

The regulation divides AI systems into four levels of risk, which can be illustrated by a pyramid.

- Unacceptable risk – systems that threaten human rights (e.g., mass biometric surveillance). They are banned.

- High risk – systems used in areas such as health, education, transportation, recruitment and critical infrastructure. They require certification, conformity assessment, full documentation and close human supervision.

- Limited risk – AI in user interactions, such as chatbots. Users must be informed that they are dealing with AI, and transparency must be ensured.

- Minimal risk – systems that do not pose risks to the user. They can be used freely.
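
The four levels above can be captured in a small lookup structure, which is sometimes handy when inventorying the AI tools a company uses. The following is a minimal sketch only: the names `RiskLevel`, `OBLIGATIONS`, `obligations_for` and `is_allowed` are hypothetical, and the obligations are the ones summarized in this article, not the legal text of the regulation.

```python
from enum import Enum


class RiskLevel(Enum):
    """The four risk levels described in this article (hypothetical naming)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations as summarized in the article, not a legal checklist.
OBLIGATIONS = {
    RiskLevel.UNACCEPTABLE: ["prohibited"],
    RiskLevel.HIGH: [
        "certification",
        "conformity assessment",
        "full documentation",
        "close human supervision",
    ],
    RiskLevel.LIMITED: ["disclose AI use", "ensure transparency"],
    RiskLevel.MINIMAL: [],
}


def obligations_for(level: RiskLevel) -> list[str]:
    """Return a copy of the obligations listed for the given risk level."""
    return list(OBLIGATIONS[level])


def is_allowed(level: RiskLevel) -> bool:
    """Only the unacceptable level is banned outright."""
    return level is not RiskLevel.UNACCEPTABLE
```

Assigning a real system to one of these levels is a legal assessment; a table like this only records the outcome of that assessment.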

AI Act: who is affected in practice?

Although in theory the regulation affects many companies, in practice only a portion of them, a few dozen percent, will have to comply with the expanded high-risk requirements. Most companies use AI tools that fall into the limited or minimal risk levels. That means no heavy regulatory burden, but transparency and compliance with the general principles of responsible data processing are still necessary.

Anonymization and the AI Act

Anonymization of documents and data is becoming crucial for two reasons. First, it reduces legal risk: data that has been properly anonymized is no longer subject to the rigors of data protection law. Second, the AI Act requires that data used to train models be legal, secure and minimized. Anonymization is therefore one of the most effective ways to meet this requirement while still using the documents to build your own models.

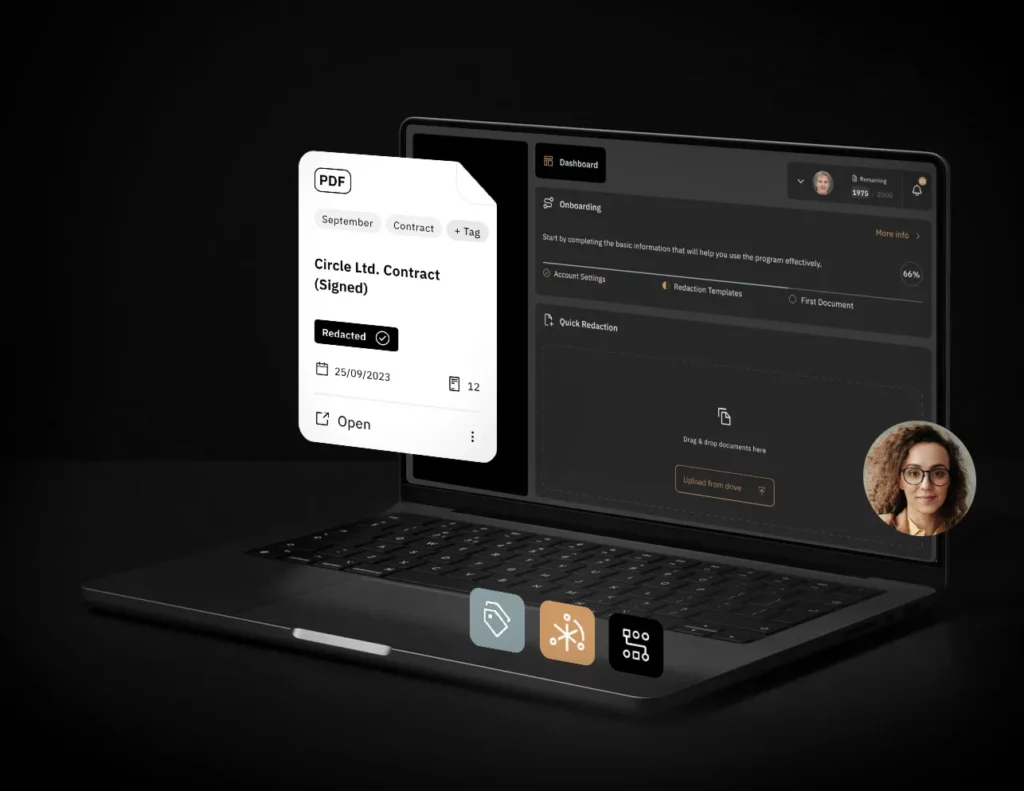

Does Bluur meet the requirements of the AI Act?

Bluur falls into the category of minimal-risk systems, meaning it does not require the heavy compliance or certification characteristic of high-risk systems. Nonetheless, the solution implements best practices aligned with the AI Act that enhance transparency, process control and data security:

- Transparency of AI and human collaboration – the user always knows when the algorithm is working and when a human is.

- Logging operations – the system records who worked on the document (human or AI) and when.

- Lack of autonomous decisions – AI in Bluur never acts independently at key moments; the final decision is up to the user.

- Full documentation of use and monitoring – allows auditing, analysis of work and compliance with regulatory requirements.

The combination of these elements means that Bluur not only meets the AI Act standards for minimal-risk systems, but goes beyond them.
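
To make the logging and human-confirmation practices listed above more concrete, here is a minimal sketch of an audit trail that records who acted on a document (human or AI) and when, and applies an AI suggestion only after the user approves it. This is an illustration under assumed names (`AuditEntry`, `AuditLog`, `apply_suggestion` are hypothetical); it does not describe Bluur's actual implementation or API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    """One immutable record: who did what to which document, and when."""
    document_id: str
    actor: str  # "human" or "ai"
    action: str
    timestamp: datetime


@dataclass
class AuditLog:
    """Append-only list of audit entries (hypothetical, in-memory only)."""
    entries: list[AuditEntry] = field(default_factory=list)

    def record(self, document_id: str, actor: str, action: str) -> AuditEntry:
        if actor not in ("human", "ai"):
            raise ValueError("actor must be 'human' or 'ai'")
        entry = AuditEntry(document_id, actor, action, datetime.now(timezone.utc))
        self.entries.append(entry)
        return entry


def apply_suggestion(
    log: AuditLog, document_id: str, suggestion: str, approved_by_user: bool
) -> bool:
    """Log the AI suggestion and the user's decision; the user has the final say."""
    log.record(document_id, "ai", f"suggested: {suggestion}")
    decision = "approved" if approved_by_user else "rejected"
    log.record(document_id, "human", f"{decision}: {suggestion}")
    return approved_by_user
```

Keeping the AI's suggestion and the human's decision as two separate entries is one way to make it clear in an audit who was responsible for each step.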

Bluur as compliance support in training models

The AI Act also introduces obligations for training datasets. If a company wants to create or retrain AI models from its own documents, it must ensure that the data does not violate the privacy or rights of individuals. Bluur allows documents to be fully anonymized before they are used to train models. Companies can then legally create their own domain-specific models while remaining in compliance with EU regulations.
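
The idea of cleaning documents before they reach a training set can be sketched in a few lines. The example below is deliberately simplistic and is not how Bluur works: it masks only email addresses and phone-like digit sequences with regular expressions, whereas real anonymization also has to handle names, addresses, identifiers and context. The names `redact` and `prepare_training_corpus` and both patterns are assumptions made for illustration.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def prepare_training_corpus(documents: list[str]) -> list[str]:
    """Redact every document before it is added to a training set."""
    return [redact(doc) for doc in documents]
```

The point of the sketch is the order of operations: redaction runs first, and only the redacted text is passed on to training.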

Summary

The AI Act is a new development that is expected to increase user security and organize the market for artificial intelligence systems. Although the regulation will most affect systems operating in high-risk areas, all companies using AI should prepare for greater transparency and accountability in data processing. Bluur is an example of a solution that fits naturally into these requirements thanks to its document anonymization, auditability and control over the process. It can be a real help in building AI Act compliance, especially in projects involving further data processing or model training.