Introduction

Data anonymization is not just a legal requirement; it is the foundation of effective privacy and information security. Two examples from very different settings illustrate this: British police officers struggling with manual redaction, and US federal courts whose systems fell victim to a major cyber attack. Both cases show how a lack of automation, standardization and data minimization can have serious consequences.

Most common challenges and mistakes in redacting and anonymizing data

Before the case studies, let’s identify the most important risk areas in the company.

- Without a uniform redaction policy, redaction is difficult to manage across the company.

- Inconsistent standards are a problem for various departments.

- Storing redundant data increases the risk of cyber attacks.

- Technology tools have not kept up with the growth of data and documents.

- Manual processes overload resources and delay audits.

Case study 1: UK police records redaction crisis

The scale of the problem

British police forces spend hundreds of thousands of hours a year manually redacting personal information from documents and records: about 770,000 hours a year, of which 210,000 go to cases that never reach prosecutors. This is a huge drain on resources.

Technical and legal dilemmas

Police officers receive a variety of evidence, such as recordings, emails or documents. Each type of evidence requires a different redaction approach, which slows down the investigation process.

The legal conflict stems from the tension between UK GDPR requirements and the obligation to disclose criminal evidence. On the one hand, overlooking personal data can result in very serious fines, which can run into millions of pounds. On the other hand, over-redaction can block cases or, worse, bring proceedings to a complete halt.

Consequences

Overstretched human resources cause investigators to lose valuable investigative time. Lack of consistency across divisions hinders cross-regional cooperation. The redaction process has become a bottleneck that paralyzes the investigation system.

Possible solutions

Analysis indicates that AI tools can reduce redaction time by 60-90%. They provide consistency and automation, and they relieve the burden on staff. However, they must be backed by standards, training, auditing and quality policies.
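To put those percentages in perspective, the short calculation below applies them to the 770,000 hours cited earlier. It is only an illustration: it assumes the 60-90% reduction would apply evenly to all of those hours, which the figures above do not claim.

```python
# Illustrative arithmetic only. Assumption: the 60-90% reduction applies
# uniformly to the 770,000 annual redaction hours cited in the article.
ANNUAL_REDACTION_HOURS = 770_000


def hours_saved(total_hours: int, reduction_pct: int) -> int:
    """Hours saved if redaction time falls by reduction_pct percent."""
    return total_hours * reduction_pct // 100


low = hours_saved(ANNUAL_REDACTION_HOURS, 60)
high = hours_saved(ANNUAL_REDACTION_HOURS, 90)
print(f"Estimated saving: {low:,} to {high:,} hours a year")
# prints: Estimated saving: 462,000 to 693,000 hours a year
```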

Case study 2: U.S. federal courts and data leakage in PACER/CM-ECF systems

Background of the incident

August 2025 saw one of the most serious cyber attacks on US judicial systems. Hackers took control of data in the PACER and CM-ECF systems. They gained access to sensitive information, including files and the identities of informants.

Causes and mechanisms of leakage

Several weaknesses contributed to the leak:

- Outdated infrastructure, with no network segmentation and no high-quality technical safeguards.

- Decentralized systems, which hampered coordinated security deployment and delayed patches.

- Violations of data minimization: the systems stored a lot of sensitive data, with no clear retention limits and no effective sanitization.

- No automated redaction and no protocols for removing metadata. The tools could not handle the scale of documents.

- A false sense of security: some documents were only visually masked, so the underlying data remained accessible and vulnerable to attack.
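The difference between visual masking and real redaction can be shown with a toy model. The sketch below is not how PACER, CM-ECF or any PDF library works; the `Page` class, its fields and the sample text are hypothetical and exist only to illustrate why a box drawn over text does not remove the text.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    """Toy page model (hypothetical): a text layer plus drawn overlay boxes."""
    text: str
    # (start, end) character spans that are covered by a black box
    overlays: list[tuple[int, int]] = field(default_factory=list)


def mask_visually(page: Page, secret: str) -> Page:
    """Draw a box over the secret; the text layer is left untouched."""
    start = page.text.find(secret)
    if start == -1:
        return page
    return Page(page.text, page.overlays + [(start, start + len(secret))])


def redact(page: Page, secret: str) -> Page:
    """Remove the secret from the text layer itself."""
    return Page(page.text.replace(secret, "[REDACTED]"), page.overlays)


def extract_text(page: Page) -> str:
    """What copy-paste or a text extraction tool sees: overlays are ignored."""
    return page.text


page = Page("Informant: Jane Doe, case 12-345")  # made-up sample data
masked = mask_visually(page, "Jane Doe")
redacted = redact(page, "Jane Doe")

print("Jane Doe" in extract_text(masked))    # True: the name is still in the file
print("Jane Doe" in extract_text(redacted))  # False: the name is gone
```

In this model the masked page looks safe on screen but still leaks the name to anyone who extracts the text, which is the failure mode described above.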

The impact of the incident

Exposure of sensitive documents, including personal data and court records, seriously threatens the integrity of judicial processes and the security of whistleblowers. Failure to effectively protect data at the system level can lead to serious breaches that negatively impact the entire justice system.

Common lessons from both cases

- Manual processes vs. automation

In the UK, document redaction relied on manual work, which generated huge time costs and a risk of errors. In the US case, the lack of automation and effective tools led to a security disaster. Both cases show that the manual approach does not scale.

Conclusion: automation with quality control and auditing is necessary.

- Policies and standards

The lack of consistent standards within an organization, such as the police, and the lack of mechanisms for data minimization and retention, as in the US, lead to chaos and increased risk.

Conclusion: clear policies, both organizational and technical, should be implemented and regularly updated.

- Scale of data processed and risk

In the UK, police had to deal with millions of documents and devices, leading to backlogs and blocked investigations. In the U.S., the scale of the attack was equally large and resulted in a breach of the judicial system at the national level.

Conclusion: tools must be scalable, able to process large amounts of data in various forms (PDF, audio, e-mail, video, etc.), and able to perform redaction efficiently and securely.

- Consequences of errors: from delays to disasters

In the UK, the consequences have been delays, loss of public trust, a risk of flawed or blocked proceedings, and large staff costs. (Source: redactable.com)

In the U.S., the consequences were much more serious: confidential data was exposed, directly threatening the security of whistleblowers and the integrity of legal proceedings. (Source: redactable.com)

How to implement good anonymization and redaction practices – recommendations

Automation and AI to support redaction

Use tools to process data quickly: identify sensitive information automatically, then verify it manually.
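The "detect automatically, then verify manually" pattern can be sketched in a few lines. This is a minimal illustration, not Bluur.ai's method: the two regular expressions, the `Finding` class and the sample sentence are assumptions made for the example, and a real detector needs far broader coverage than two patterns.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns for illustration only.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "uk_phone": re.compile(r"\b0\d{10}\b"),
}


@dataclass
class Finding:
    kind: str
    start: int
    end: int
    text: str
    approved: bool = False  # set by a human reviewer, never by the detector


def detect(text: str) -> list[Finding]:
    """Automatic step: propose candidate spans, ordered by position."""
    findings = []
    for kind, pattern in PATTERNS.items():
        for m in pattern.finditer(text):
            findings.append(Finding(kind, m.start(), m.end(), m.group()))
    return sorted(findings, key=lambda f: f.start)


def apply_approved(text: str, findings: list[Finding]) -> str:
    """Replace only the spans a reviewer approved."""
    # Work from the end of the text so earlier offsets stay valid.
    for f in sorted(findings, key=lambda f: f.start, reverse=True):
        if f.approved:
            text = text[:f.start] + f"[{f.kind.upper()}]" + text[f.end:]
    return text


sample = "Contact j.smith@example.org or call 07700900123 after 5pm."
findings = detect(sample)
for f in findings:
    f.approved = True  # stand-in for the manual review step
print(apply_approved(sample, findings))
# prints: Contact [EMAIL] or call [UK_PHONE] after 5pm.
```

Keeping `approved` as a separate flag means nothing is removed until a person has confirmed it, and anything the reviewer rejects stays in the document unchanged.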

Build and standardize redaction procedures

Introduce clear redaction policies and regular training. Establish mechanisms for auditing and supervision, and escalation rules in case of concerns.

Data minimization as the foundation of systems architecture

Identify which data is necessary and limit how long it can be stored. Set up automatic mechanisms for deleting redundant data; this keeps processes more consistent and increases information security.
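A retention limit with automatic deletion might look like the sketch below. The categories, the limits in `RETENTION_DAYS` and the sample records are hypothetical; actual retention periods depend on the organization and the law that applies to it.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical retention limits per document category, in days.
RETENTION_DAYS = {"case_file": 365 * 6, "access_log": 90}


@dataclass
class Record:
    record_id: str
    category: str
    created: date


def is_expired(record: Record, today: date) -> bool:
    """True when the record is older than the limit for its category."""
    limit = RETENTION_DAYS.get(record.category)
    if limit is None:
        return False  # unknown category: keep it for review rather than delete
    return today - record.created > timedelta(days=limit)


def purge(records: list[Record], today: date) -> tuple[list[Record], list[str]]:
    """Return the records to keep and the IDs of the records to delete."""
    kept = [r for r in records if not is_expired(r, today)]
    deleted_ids = [r.record_id for r in records if is_expired(r, today)]
    return kept, deleted_ids


records = [
    Record("A1", "access_log", date(2025, 1, 1)),
    Record("A2", "access_log", date(2025, 6, 1)),
    Record("C1", "case_file", date(2020, 3, 15)),
]
kept, deleted = purge(records, today=date(2025, 7, 1))
print(deleted)  # ['A1']
```

Returning the deleted IDs rather than deleting silently leaves a trail that can be written to an audit log.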

Scalable infrastructure and adequate security

Systems must be prepared for growing volumes of data. High security standards and protection against attacks are necessary.

Training and legal awareness

Regularly train employees on their rights and on the limitations and risks of technology. This includes officers, officials and document administrators.

Recommendations: how to build a secure document processing workflow

- Step 1: Audit existing processes

Review which areas need to be automated, where the bottlenecks are, and which documents are processed and in which languages.

- Step 2: Define redaction standards and policies

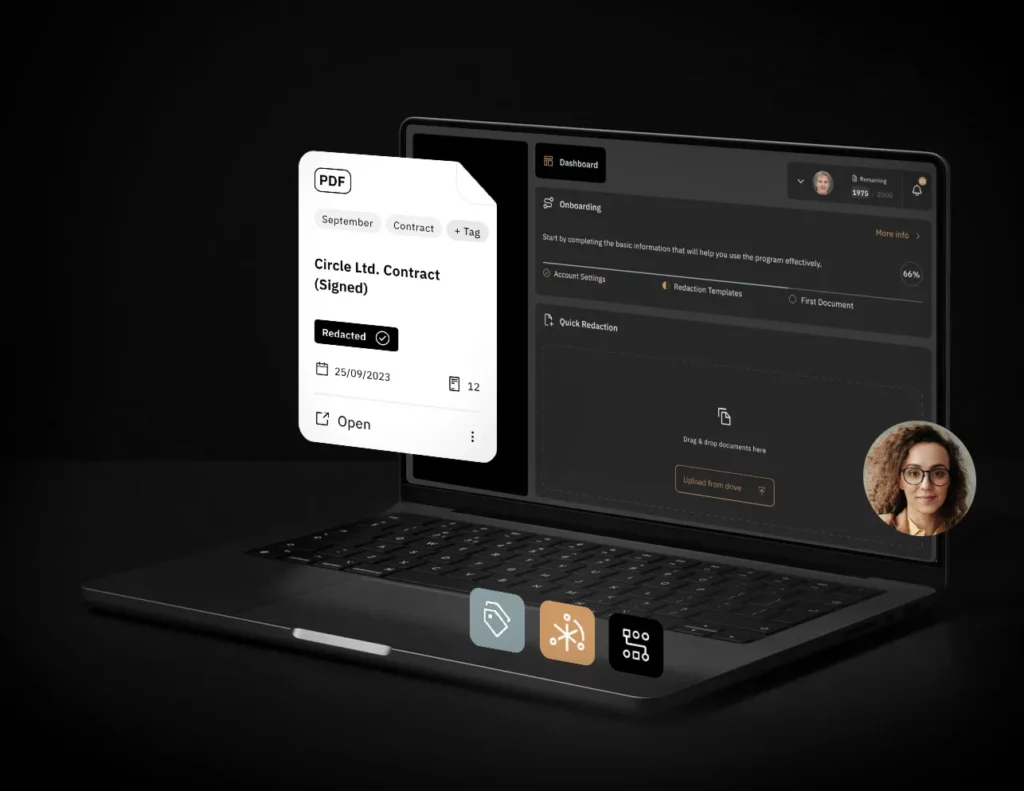

Determine what data must be redacted and what the sensitivity levels are, and put in place procedures for an auditable document processing path.

- Step 3: Implement an AI tool

Implementing Bluur.ai allows you to automate the process, minimizing manual errors, standardizing procedures, scaling workflows and easing the burden on staff.

- Step 4: Training and adaptation of employees

Train teams on the new workflow, covering both the use of the tool and the importance of redaction and data minimization.

- Step 5: Monitor and continuously optimize

Regularly review the process, audit the effectiveness of redaction, update rules and monitor compliance with regulations and security standards.
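The redaction policy from Step 2 and the audit trail that Step 5 relies on can both be expressed as data. The sketch below is one possible shape, not a Bluur.ai interface: the data types in `POLICY`, the sensitivity levels, the threshold and the audit fields are all assumptions chosen for the example.

```python
import hashlib
import json
from datetime import datetime, timezone
from enum import Enum


class Sensitivity(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical policy: data type -> sensitivity level.
POLICY = {
    "name": Sensitivity.MEDIUM,
    "email": Sensitivity.MEDIUM,
    "informant_identity": Sensitivity.HIGH,
    "case_number": Sensitivity.LOW,
}


def must_redact(data_type: str, threshold: Sensitivity = Sensitivity.MEDIUM) -> bool:
    """Redact anything at or above the threshold."""
    # Types missing from the policy are treated as HIGH, so they are
    # redacted by default instead of slipping through.
    level = POLICY.get(data_type, Sensitivity.HIGH)
    return level.value >= threshold.value


def audit_entry(doc_bytes: bytes, redacted_types: list[str], reviewer: str) -> str:
    """Build one JSON audit record for a processed document."""
    entry = {
        "sha256": hashlib.sha256(doc_bytes).hexdigest(),  # fingerprint of the output
        "redacted_types": sorted(redacted_types),
        "reviewer": reviewer,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(entry, sort_keys=True)


found = ["name", "case_number", "informant_identity", "passport_number"]
to_redact = [t for t in found if must_redact(t)]
print(to_redact)  # ['name', 'informant_identity', 'passport_number']
print(audit_entry(b"example document", to_redact, reviewer="reviewer-01"))
```

Keeping the policy in one table makes rule updates a data change rather than a code change, and the hash in each audit record lets a later review confirm which version of a document was released.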

How Bluur® can protect against such risks

Automated and precise editing of documents

Bluur.ai uses advanced AI/ML models to automatically identify sensitive data in documents and securely anonymize it, ensuring high efficiency and repeatability of the procedure.

High accuracy and multilingual support

The system offers more than 95% data redaction accuracy, which means that most sensitive information is detected and processed automatically. With support for multiple languages, including Polish, English and German, Bluur.ai suits organizations that process documents in different languages on a daily basis.

Security and compliance management

Bluur.ai provides a secure workflow that makes it easier to introduce auditable redaction standards. Automation minimizes human error, relieves administrative resources and keeps manual processes from slowing down work. Document formats can be configured to the needs of the organization, which supports consistent audit procedures and regulatory compliance.

Scalability and integration

With its high efficiency in processing large volumes of documents, Bluur.ai is a good fit where manual redaction proves inefficient. It helps reduce employee overload and improves employee efficiency. This is particularly relevant for units such as the police in the UK, where traditional manual processes very often lead to serious overload. Imagine a solution that processes hundreds or thousands of documents a day while maintaining a uniform standard of quality and security.

Support in data minimization and document lifecycle

By implementing Bluur.ai, organizations can implement retention and audit policies. The system automatically redacts data when documents are uploaded. It also lets you monitor the processing history, making it easier to meet regulatory requirements.

Summary

Lack of automation, standardization and data minimization can lead to operational roadblocks and delays. It consumes human resources and puts security and privacy at risk, as the cases of the police in the UK and the courts in the US show.

In response to these challenges, redaction automation solutions such as Bluur offer an effective countermeasure, primarily through high accuracy, scalability, integration and auditability. This helps you meet regulatory requirements, secure processes and optimize operations.